Solving Data Quality Issues in AI Systems (2025 Update)

In the rapidly evolving landscape of artificial intelligence, data quality is the cornerstone on which AI projects succeed or fail. As we look ahead to 2025, addressing AI data quality challenges has become more critical than ever: without high-quality data, even the most sophisticated algorithms are rendered ineffective. This article examines these challenges, explores proven strategies to overcome them, and considers how innovation and ethics will shape the future of AI data management.

Introduction to Data Quality in AI Systems

Artificial intelligence thrives on data—vast amounts of it. But here's the catch: the quality of that data determines whether AI systems deliver transformative insights or catastrophic errors. Inconsistent, biased, or incomplete datasets can derail even the most promising projects.

As industries increasingly rely on AI for decision-making, the urgency to address AI data quality challenges has never been greater. By 2025, the sheer volume of data generated by IoT devices, cloud platforms, and third-party APIs will only compound the problem. Organizations must act now to ensure their AI systems are built on a foundation of reliable, accurate, and ethical data.

Understanding AI Data Quality Challenges

Inconsistent Data Sources

Imagine piecing together a puzzle where every piece comes from a different set. That's what working with inconsistent data feels like. Fragmented data streams from IoT sensors, legacy systems, and third-party APIs often lack standardization. For instance, one system might record timestamps in UTC, while another uses local time zones. These discrepancies create chaos during data integration, leading to unreliable outputs.

The solution? Harmonizing data sources through robust preprocessing pipelines. However, this is easier said than done, especially when dealing with real-time data streams that demand immediate attention. Addressing these inconsistencies is one of the primary AI data quality challenges organizations face today.
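As a small illustration of one harmonization step, the sketch below normalizes the UTC-versus-local-time mismatch described above so every record carries a timezone-aware UTC timestamp. The source names and their offsets are hypothetical, and fixed offsets are a simplifying assumption; a production pipeline would more likely map each source to an IANA zone name (for example via Python's `zoneinfo`) so daylight-saving transitions are handled.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical per-source configuration: the UTC offset in which each source
# records *naive* timestamps. Fixed offsets ignore daylight saving; real
# pipelines would typically use IANA zone names instead.
SOURCE_UTC_OFFSETS = {
    "sensor_a": timedelta(hours=0),     # already records UTC
    "legacy_erp": timedelta(hours=-5),  # records local time, assumed UTC-5
}


def to_utc(raw: str, source: str) -> datetime:
    """Parse an ISO-8601 timestamp and return a timezone-aware UTC datetime."""
    ts = datetime.fromisoformat(raw)
    if ts.tzinfo is None:
        # Naive timestamp: attach the offset configured for this source.
        ts = ts.replace(tzinfo=timezone(SOURCE_UTC_OFFSETS[source]))
    return ts.astimezone(timezone.utc)


records = [
    ("sensor_a", "2025-03-01T14:00:00+00:00"),
    ("legacy_erp", "2025-03-01T09:00:00"),
]
for source, raw in records:
    # Both lines print the same instant once normalized to UTC.
    print(source, to_utc(raw, source).isoformat())
```

Failing loudly (a `KeyError`) on an unknown source is deliberate here: silently guessing a timezone is exactly the kind of discrepancy harmonization is meant to remove.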

Data Bias and Fairness

Bias in AI training data isn't just an inconvenience—it's a ticking time bomb. When datasets disproportionately represent specific demographics, the resulting models perpetuate systemic inequalities. For example, facial recognition systems trained predominantly on lighter-skinned individuals have historically struggled to identify darker-skinned faces accurately.

Eliminating bias requires vigilance at every stage of the data lifecycle. From collection to labeling, each step must be scrutinized to ensure fairness. Yet, this remains a daunting task, as biases often lurk unnoticed until they manifest in flawed predictions.
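One concrete form of that scrutiny is a simple representation and performance audit: how much of the dataset each group accounts for, and whether model accuracy differs across groups. The sketch below is a minimal, hypothetical example using toy labels; the group names and the 30% minimum-share threshold are assumptions for illustration, not a recommended standard.

```python
from collections import Counter, defaultdict
from typing import Dict, Hashable, List, Sequence


def group_shares(groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Fraction of records that belong to each demographic group."""
    counts = Counter(groups)
    total = len(groups)
    return {g: n / total for g, n in counts.items()}


def per_group_accuracy(groups, y_true, y_pred) -> Dict[Hashable, float]:
    """Model accuracy computed separately for each group."""
    correct: Dict[Hashable, int] = defaultdict(int)
    seen: Dict[Hashable, int] = defaultdict(int)
    for g, t, p in zip(groups, y_true, y_pred):
        seen[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / seen[g] for g in seen}


def underrepresented(shares: Dict[Hashable, float], min_share: float) -> List[Hashable]:
    """Groups whose share of the data falls below a (hypothetical) threshold."""
    return sorted(g for g, s in shares.items() if s < min_share)


# Toy data: group "B" is both rarer and served worse by the model.
groups = ["A"] * 8 + ["B"] * 2
y_true = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0, 0, 1]

shares = group_shares(groups)
accuracy = per_group_accuracy(groups, y_true, y_pred)
print("shares:", shares)
print("accuracy by group:", accuracy)
print("underrepresented:", underrepresented(shares, min_share=0.3))
```

An audit like this only surfaces disparities; deciding whether they are acceptable, and how to rebalance collection or labeling, still requires human judgment.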

Missing or Incomplete Data

Gaps in datasets are like missing pieces in a jigsaw puzzle—they leave the picture incomplete. Missing values can arise from human error, sensor malfunctions, or simply because the data was never collected. In healthcare AI, for instance, incomplete patient records could lead to incorrect diagnoses or treatment recommendations.

To mitigate this issue, advanced imputation techniques and anomaly detection tools are essential. However, these methods are not foolproof and require careful calibration to avoid introducing new inaccuracies.
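A minimal sketch of one calibrated imputation approach: fill gaps with the column median, keep a mask recording which values were filled, and refuse to impute when too much of the column is missing. Median imputation is just one simple technique chosen for illustration, and the `max_missing_rate` cutoff of 50% is an assumed value that would need tuning per dataset.

```python
from statistics import median
from typing import List, Optional, Tuple


def impute_median(
    values: List[Optional[float]], max_missing_rate: float = 0.5
) -> Tuple[List[float], List[bool]]:
    """Fill None with the column median; also return a mask of filled positions."""
    if not values:
        raise ValueError("cannot impute an empty column")
    missing = [v is None for v in values]
    rate = sum(missing) / len(values)
    if rate > max_missing_rate:
        # Too many gaps: imputing would mostly invent data, so escalate instead.
        raise ValueError(f"{rate:.0%} of values missing; refusing to impute")
    fill = median(v for v in values if v is not None)
    imputed = [fill if m else v for v, m in zip(values, missing)]
    return imputed, missing


# Hypothetical heart-rate readings with two gaps.
heart_rate = [72.0, None, 80.0, 76.0, None]
imputed, was_missing = impute_median(heart_rate)
print(imputed)      # gaps filled with the median of the observed values
print(was_missing)  # keeps the filled positions visible to models and reviewers
```

Keeping the `was_missing` mask matters: it lets downstream models and reviewers distinguish measured values from estimated ones instead of treating both as equally reliable.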

The Impact of Poor Data Quality on AI Outcomes

Poor data quality doesn't just affect AI performance; it has far-reaching consequences. Flawed predictions can lead to costly business decisions, regulatory fines, and loss of consumer trust. Consider autonomous vehicles: if the underlying data fails to account for rare weather conditions, accidents may occur, tarnishing public perception of self-driving technology.

Moreover, compliance risks loom large. As governments worldwide tighten regulations around data privacy and transparency, organizations must ensure their AI systems adhere to ethical standards. Failure to do so could result in hefty penalties and reputational damage. Clearly, resolving AI data quality challenges isn't optional—it's imperative.

Proven Strategies to Enhance Data Quality

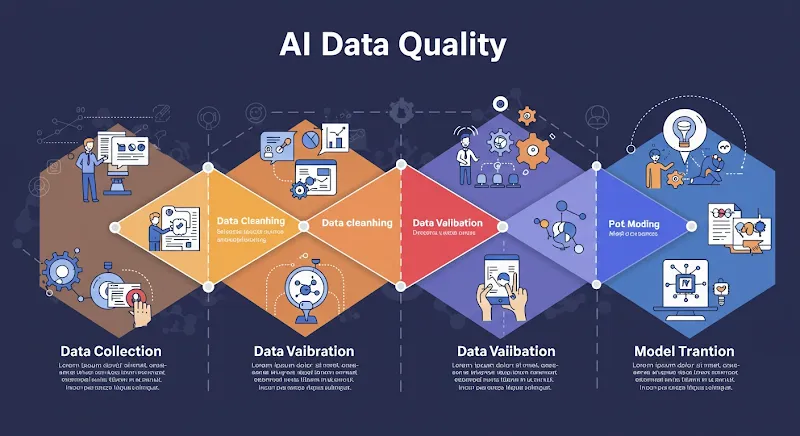

Data Cleaning and Preprocessing Techniques

Cleaning data is akin to preparing soil before planting seeds. Deduplication removes redundant entries, normalization ensures consistent formatting, and outlier detection identifies anomalies that skew results. Tools like Apache NiFi and Trifacta (now Alteryx Designer Cloud) streamline these processes, enabling teams to preprocess data efficiently.

However, automation alone isn't enough. Human oversight remains crucial to validate automated findings and make judgment calls when ambiguity arises. Combining machine precision with human intuition yields the best outcomes.
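The three cleaning steps can be sketched in a few lines of standard-library Python. This is an illustrative example, not how NiFi or Trifacta work internally: the record fields are hypothetical, and outliers are detected with Tukey's interquartile-range fences. In keeping with the point about human oversight, flagged records are returned for review rather than silently deleted.

```python
from statistics import quantiles
from typing import Dict, List


def normalize(record: Dict[str, str]) -> Dict[str, object]:
    """Consistent formatting: trimmed upper-case IDs and numeric amounts."""
    return {
        "customer_id": record["customer_id"].strip().upper(),
        "amount": float(record["amount"]),
    }


def deduplicate(records: List[dict]) -> List[dict]:
    """Drop exact duplicates (after normalization), keeping the first occurrence."""
    seen = set()
    unique = []
    for r in records:
        key = (r["customer_id"], r["amount"])
        if key not in seen:
            seen.add(key)
            unique.append(r)
    return unique


def flag_outliers_iqr(records: List[dict], k: float = 1.5) -> List[dict]:
    """Return records outside [Q1 - k*IQR, Q3 + k*IQR] for human review."""
    values = [r["amount"] for r in records]
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [r for r in records if not low <= r["amount"] <= high]


raw = [
    {"customer_id": " c1 ", "amount": "10.0"},
    {"customer_id": "C1", "amount": "10"},   # duplicate once normalized
    {"customer_id": "C2", "amount": "12.5"},
    {"customer_id": "C3", "amount": "11"},
    {"customer_id": "C4", "amount": "9.5"},
    {"customer_id": "C5", "amount": "950"},  # suspicious value
]
clean = deduplicate([normalize(r) for r in raw])
review_queue = flag_outliers_iqr(clean)
print(len(clean), "unique records;", "flagged for review:", review_queue)
```

Note that normalization runs before deduplication: `" c1 "` and `"C1"` only become recognizable as the same customer once formatting is consistent.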

Implementing Data Governance Frameworks

Effective data governance establishes clear policies for data validation, metadata management, and audits. By defining who owns which datasets and how they should be handled, organizations reduce the risk of misuse or mismanagement.

For example, financial institutions often implement strict governance frameworks to comply with anti-money laundering laws. These frameworks not only enhance data quality but also foster accountability across departments.
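Governance policies become easier to enforce when they are written down in machine-checkable form. The sketch below models a hypothetical "data contract" that records a dataset's owner alongside its required fields and validation rules, so every violation can be traced to an accountable team. The class, team name, and rules are illustrative assumptions, not part of any specific governance product.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A rule pairs a human-readable description with a predicate on the field value.
Rule = Tuple[str, Callable[[object], bool]]


@dataclass
class DatasetContract:
    """Hypothetical governance record: who owns a dataset and what 'valid' means."""
    name: str
    owner: str
    required_fields: Tuple[str, ...]
    rules: Dict[str, Rule] = field(default_factory=dict)

    def validate(self, record: dict) -> List[str]:
        """Return a list of violations; an empty list means the record passes."""
        errors = [f"{f}: missing" for f in self.required_fields if record.get(f) is None]
        for f, (description, check) in self.rules.items():
            value = record.get(f)
            # Missing values are already reported above, so only check present ones.
            if value is not None and not check(value):
                errors.append(f"{f}: {description}")
        return errors


transactions = DatasetContract(
    name="transactions",
    owner="payments-data-team",  # hypothetical owning team
    required_fields=("txn_id", "amount", "currency"),
    rules={
        "amount": ("must be positive", lambda v: v > 0),
        "currency": ("must be in the approved list", lambda v: v in {"USD", "EUR", "GBP"}),
    },
)

record = {"txn_id": "T-1", "amount": -5.0, "currency": "usd"}
for problem in transactions.validate(record):
    print(f"[{transactions.name} | owner: {transactions.owner}] {problem}")
```

Attaching the owner to each violation message is the design point: the check itself is simple, but routing failures to a named team is what turns validation into accountability.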

Leveraging Automation for Data Quality Management

Automation is revolutionizing data quality management. AI-driven tools such as auto-tagging and anomaly detection monitor datasets in real time, flagging issues before they escalate. Predictive analytics takes this a step further by forecasting potential problems based on historical trends.

Take retail inventory management, for instance. Automated pipelines continuously update stock levels, reducing errors caused by manual entry. Such innovations not only save time but also improve accuracy—a win-win for businesses grappling with AI data quality challenges.
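Real-time anomaly monitoring can be approximated with a streaming z-score check. The sketch below maintains a running mean and variance using Welford's online algorithm and flags readings more than three standard deviations from the baseline. The inventory readings, the threshold of 3.0, and the warm-up length of 5 are all hypothetical; commercial tools use more sophisticated models.

```python
import math


class StreamingZScoreMonitor:
    """Flag values far from the running mean, updating statistics one value at a time."""

    def __init__(self, threshold: float = 3.0, warmup: int = 5):
        self.threshold = threshold  # how many standard deviations counts as anomalous
        self.warmup = warmup        # readings to collect before flagging anything
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0               # sum of squared deviations (Welford)

    def check(self, x: float) -> bool:
        """Return True if x looks anomalous; otherwise fold it into the baseline."""
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                # Anomalies are NOT added to the baseline, so one bad reading
                # cannot inflate the variance and mask the next one.
                return True
        # Welford's online update of the mean and squared deviations.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return False


# Hypothetical hourly stock counts for one SKU; 500 looks like an entry error.
readings = [100, 102, 98, 101, 99, 100, 97, 500, 103]
monitor = StreamingZScoreMonitor(threshold=3.0, warmup=5)
flagged = [i for i, x in enumerate(readings) if monitor.check(x)]
print(flagged)  # → [7]
```

Excluding flagged values from the baseline has a trade-off: if the data undergoes a genuine, permanent shift (say, a new warehouse comes online), every new reading will keep being flagged until someone resets the monitor.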

Case Studies: Overcoming Data Quality Hurdles

Healthcare AI Improving Diagnostic Accuracy

A leading hospital network recently overhauled its diagnostic AI system by curating high-quality datasets. They partnered with medical professionals to label images meticulously, ensuring consistency and accuracy. The result? A 30% improvement in early-stage cancer detection rates.

This case underscores the importance of investing in data curation. Without high-quality inputs, even cutting-edge algorithms falter.
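One common way to check whether labeling is actually consistent is to have two annotators label the same items and measure their agreement with Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a generic illustration with made-up labels; it is not the method or data used in the case above.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(labels_a: Sequence[Hashable], labels_b: Sequence[Hashable]) -> float:
    """Chance-corrected agreement between two annotators: (p_o - p_e) / (1 - p_e)."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items both annotators labeled identically.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if each annotator labeled at random with their own class frequencies.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(count_a[c] * count_b[c] for c in set(count_a) | set(count_b)) / (n * n)
    if p_e == 1.0:
        # Both annotators used one identical class for every item: perfect agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


annotator_1 = ["malignant", "benign", "benign", "malignant", "benign", "benign"]
annotator_2 = ["malignant", "benign", "malignant", "malignant", "benign", "benign"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))
```

Here the annotators agree on 5 of 6 images (83%), but because half of that agreement would be expected by chance, kappa is only about 0.67. Low kappa on a sample is a signal to tighten labeling guidelines before training on the full set.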

Retail AI Reducing Inventory Errors

A global retailer faced frequent stockouts due to outdated inventory tracking systems. By implementing automated data pipelines, they reduced errors by 40% within six months. Real-time updates allowed managers to restock shelves promptly, boosting customer satisfaction and sales revenue.

These examples demonstrate how targeted efforts to address AI data quality challenges yield tangible benefits.

Future Trends in AI Data Management (2025 and Beyond)

AI-Driven Data Quality Tools

Looking ahead, predictive analytics and self-healing datasets will redefine data management. Self-healing systems automatically detect and correct errors without human intervention, minimizing downtime and enhancing reliability.
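"Self-healing" has no single standard definition; one modest interpretation is a set of repair rules that fix known, well-understood error patterns automatically, record every fix in an audit log, and escalate anything still invalid to a person. The sketch below assumes that interpretation. The rule names, fields, and validity check are hypothetical.

```python
from typing import Callable, List, Tuple

# A repair rule: (name, does-this-rule-apply?, fix-the-record).
Rule = Tuple[str, Callable[[dict], bool], Callable[[dict], dict]]

RULES: List[Rule] = [
    ("strip_sku",
     lambda r: r["sku"] != r["sku"].strip(),
     lambda r: {**r, "sku": r["sku"].strip()}),
    ("uppercase_country",
     lambda r: r["country"] != r["country"].upper(),
     lambda r: {**r, "country": r["country"].upper()}),
]


def is_valid(r: dict) -> bool:
    """Hypothetical validity check: non-empty SKU and a two-letter upper-case country code."""
    return bool(r["sku"]) and len(r["country"]) == 2 and r["country"].isalpha() and r["country"].isupper()


def heal(records: List[dict], rules: List[Rule]):
    """Apply repair rules, log every fix, and escalate records that remain invalid."""
    healed, audit_log, escalated = [], [], []
    for i, original in enumerate(records):
        current = dict(original)
        for name, applies, fix in rules:
            if applies(current):
                current = fix(current)
                audit_log.append((i, name))  # every automatic change stays traceable
        if is_valid(current):
            healed.append(current)
        else:
            escalated.append(original)  # hand the untouched original to a human
    return healed, audit_log, escalated


records = [
    {"sku": " A-100 ", "country": "us"},
    {"sku": "B-200", "country": "DE"},
    {"sku": "", "country": "fr"},  # no rule can invent a missing SKU
]
healed, audit_log, escalated = heal(records, RULES)
print("healed:", healed)
print("audit log:", audit_log)
print("escalated:", escalated)
```

Even when repairs run without human intervention, the audit log and the escalation queue keep the process reviewable, which matters for the compliance concerns raised earlier.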

Ethical Data Handling Practices

As AI becomes ubiquitous, ethical considerations will take center stage. Balancing innovation with privacy and fairness requires transparent practices and robust safeguards. Organizations that prioritize ethics will build stronger relationships with consumers and regulators alike.

Conclusion: The Path to Reliable AI Systems

Addressing AI data quality challenges is no longer optional—it's a necessity. From tackling inconsistent data sources to implementing robust governance frameworks, every step counts toward building reliable AI systems. As we march toward 2025, leveraging automation and embracing ethical practices will pave the way for a brighter, smarter future.

By prioritizing data quality, organizations can unlock the full potential of AI, driving innovation while fostering trust. After all, the journey to excellence begins with clean, accurate, and ethical data.

Table: Common AI Data Quality Challenges and Solutions

| CHALLENGE | IMPACT | SOLUTION |
|---|---|---|
| Inconsistent Data Sources | Flawed integrations, unreliable outputs | Standardize formats, use preprocessing tools |
| Data Bias | Systemic inequalities, inaccurate models | Audit datasets, diversify sample groups |
| Missing or Incomplete Data | Gaps in insights, incorrect predictions | Use imputation techniques, anomaly detection |